I wanted to explore how far I could go with a fully local AI agent using Retrieval-Augmented Generation (RAG). As a small curiosity-driven evening project, I decided to build an agent that can answer questions about airline reviews — entirely offline, fast, and inexpensive.

This article walks through the idea, tools, and architecture behind the project, with minimal but practical Python code.

Tech Stack Overview

Here’s what I used:

Language: Python

LLM runtime: Ollama

Models:

llama3.2 for question answering

mxbai-embed-large for embeddings

Vector store: Chroma

Libraries:

langchain

langchain-ollama

langchain-chroma

pandas

Dataset: Airline Reviews (CSV) from Kaggle

Why Ollama?

I installed Ollama on a Linux cloud server, but one of the nicest things about it is that it also runs smoothly on most modern PCs and laptops.

Ollama made this project:

Easy to run locally

Cheap (no API costs)

Fast enough for experimentation

Perfect for side projects and learning.

Dataset Preparation

The dataset comes from Kaggle and contains airline reviews in CSV format.

To make vector ingestion faster and lighter:

I created a reduced CSV version

Kept only the columns relevant for semantic search (review text, airline name, rating, etc.)

This significantly improved:

Embedding generation time

Vector store loading speed
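As a rough sketch of this reduction step, the snippet below keeps only a handful of columns and drops rows that have no review text. The column names (`Airline Name`, `Review_Title`, `Review`, `Overall_Rating`) and the helper name `reduce_reviews_csv` are assumptions for illustration; adjust them to the headers in the actual Kaggle file.

```python
import pandas as pd

# Hypothetical column names; adjust them to the headers in your CSV.
KEEP_COLUMNS = ["Airline Name", "Review_Title", "Review", "Overall_Rating"]


def reduce_reviews_csv(src_path, dst_path, columns=KEEP_COLUMNS, text_column="Review"):
    """Write a smaller CSV containing only the columns used for retrieval."""
    # usecols skips the unused columns while parsing, so they never reach memory.
    df = pd.read_csv(src_path, usecols=columns)
    # Rows without review text cannot be embedded, so drop them.
    df = df.dropna(subset=[text_column])
    df.to_csv(dst_path, index=False)
    return df
```

Calling `reduce_reviews_csv("airline_reviews.csv", "airline_reviews_small.csv")` (both file names are placeholders) would produce the reduced file that the rest of the pipeline reads.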

High-Level Architecture

The flow is straightforward:

Load airline reviews from CSV using pandas

Generate embeddings using mxbai-embed-large

Store vectors in Chroma

Retrieve relevant reviews for a user question

Pass retrieved reviews + question to llama3.2

Generate an answer strictly based on retrieved content

This is classic RAG, but fully local.
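To make the retrieval step concrete, here is a toy version in plain Python: every review and the question are represented as vectors, and retrieval returns the reviews whose vectors are closest to the question by cosine similarity. The three-dimensional vectors are made up for illustration; in the project, mxbai-embed-large produces the embeddings and Chroma performs the search.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, indexed_docs, k=2):
    """Return the k document texts whose vectors are closest to the query."""
    ranked = sorted(
        indexed_docs,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# Made-up 3-d "embeddings"; real embedding vectors have many more dimensions.
docs = [
    ("Crew was friendly and attentive", [0.9, 0.1, 0.0]),
    ("Seat was cramped on the long-haul flight", [0.1, 0.9, 0.1]),
    ("Luggage arrived two days late", [0.0, 0.2, 0.9]),
]
question_vector = [0.8, 0.2, 0.1]  # pretend embedding of "How is the service?"
print(top_k(question_vector, docs, k=1))
```

The retrieved texts are what later gets pasted into the prompt as context.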

Prompt Design

I kept the prompt explicit and restrictive so the model would not invent information:

You are an expert in answering questions about airline reviews.

Use the provided reviews to answer the question as accurately as possible.

Here are some relevant reviews: {reviews}

Here is the question to answer: {question}

IMPORTANT: Base your answer ONLY on the reviews provided above. If no reviews are provided, say "No reviews were found."

This single instruction already improved answer reliability a lot.
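For illustration, this is roughly how the two placeholders get filled before the text reaches the model. The sketch uses plain `str.format`, and `build_prompt` is a hypothetical helper rather than a function from the repository. With LangChain, the same template string can be passed to `ChatPromptTemplate.from_template` instead.

```python
PROMPT_TEMPLATE = """You are an expert in answering questions about airline reviews.

Use the provided reviews to answer the question as accurately as possible.

Here are some relevant reviews: {reviews}

Here is the question to answer: {question}

IMPORTANT: Base your answer ONLY on the reviews provided above. If no reviews are provided, say "No reviews were found."
"""


def build_prompt(reviews, question):
    """Fill the template with retrieved review texts and the user's question."""
    # Join reviews with blank lines; an empty list leaves the slot empty,
    # which is the case the final instruction in the template covers.
    reviews_text = "\n\n".join(reviews)
    return PROMPT_TEMPLATE.format(reviews=reviews_text, question=question)


print(build_prompt(["Great crew, smooth flight."], "How is the service?"))
```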

Minimal Python Setup (Conceptual)

The code is intentionally basic and readable:

Load CSV with pandas

Create embeddings with Ollama embeddings

Store & query vectors with Chroma

Chain retrieval + LLM with LangChain

I avoided over-engineering — the goal was clarity, not abstraction layers.
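The four steps could be wired together roughly as follows. This is a minimal sketch under stated assumptions, not the repository's code: the column names, the collection name, the database directory, and the helper names (`row_to_text`, `build_retriever`, `answer`) are all hypothetical, and it needs a running Ollama instance with both models pulled.

```python
def row_to_text(row):
    """Combine the fields of one review into the text that gets embedded."""
    # Column names are assumptions; match them to your reduced CSV.
    return f"{row['Airline Name']}: {row['Review_Title']}. {row['Review']}"


def build_retriever(csv_path, db_dir="./chroma_airline_db", k=5):
    """Embed every review into a persistent Chroma collection and return a retriever."""
    # Imported here so row_to_text stays usable without the full stack installed.
    import pandas as pd
    from langchain_chroma import Chroma
    from langchain_core.documents import Document
    from langchain_ollama import OllamaEmbeddings

    df = pd.read_csv(csv_path)
    docs = [
        Document(
            page_content=row_to_text(row),
            # Stored as a string because rating columns are not always numeric.
            metadata={"rating": str(row["Overall_Rating"])},
        )
        for _, row in df.iterrows()
    ]
    store = Chroma(
        collection_name="airline_reviews",
        persist_directory=db_dir,
        embedding_function=OllamaEmbeddings(model="mxbai-embed-large"),
    )
    # Explicit ids give each review a stable identity in the collection.
    store.add_documents(documents=docs, ids=[str(i) for i in range(len(docs))])
    return store.as_retriever(search_kwargs={"k": k})


def answer(question, retriever, template):
    """Retrieve reviews for the question and ask llama3.2 to answer from them."""
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_ollama import OllamaLLM

    chain = ChatPromptTemplate.from_template(template) | OllamaLLM(model="llama3.2")
    reviews = "\n\n".join(doc.page_content for doc in retriever.invoke(question))
    return chain.invoke({"reviews": reviews, "question": question})
```

Typical use would be `retriever = build_retriever("airline_reviews_small.csv")` followed by `answer("How do passengers generally feel about Emirates?", retriever, template)`, where `template` is the prompt text from the Prompt Design section and the file name is a placeholder. Because embedding is the slow part, a real script would likely skip `add_documents` when the database directory already exists.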

Example Results

I tested the agent with different types of questions.

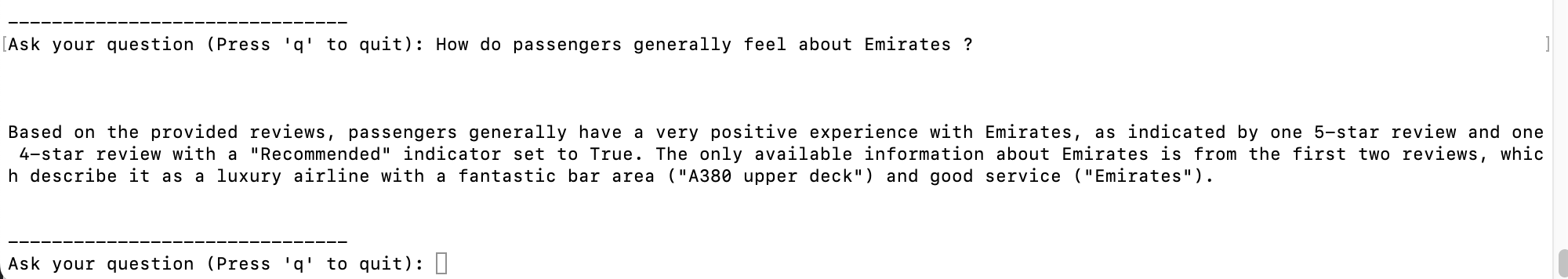

✅ Example 1: Valid Question

Question:

How do passengers generally feel about Emirates?

Result: The agent retrieved multiple relevant reviews and summarized them correctly.

❌ Example 2: No Relevant Data

Question:

Is Honda CRA a good SUV car?

Result: Since no relevant reviews were retrieved, the agent responded:

No reviews were found...

This behavior is exactly what I wanted — no incorrect information.
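A related detail: with a plain top-k retriever, a nearest-neighbour search returns the closest stored vectors even when none of them is really about the question. One way to make the "no reviews" case explicit is to discard weak matches before building the prompt. This is offered as an assumption, not necessarily what the repository does. The sketch below works on `(text, distance)` pairs, where a lower distance means a closer match, and the cutoff value is hypothetical and would need tuning on real data.

```python
def keep_relevant(scored_reviews, max_distance=0.8):
    """Keep review texts whose distance to the question is at most max_distance.

    scored_reviews: iterable of (text, distance) pairs, lower distance = closer.
    max_distance: hypothetical cutoff; tune it for your embedding model and data.
    """
    return [text for text, distance in scored_reviews if distance <= max_distance]


# Made-up distances for illustration.
on_topic = [("Emirates crew were excellent", 0.35), ("Emirates food was average", 0.52)]
off_topic = [("Seat was cramped", 1.21), ("Flight was delayed", 1.30)]

print(keep_relevant(on_topic))   # both reviews pass the cutoff
print(keep_relevant(off_topic))  # empty list, so the prompt's fallback applies
```

With Chroma in LangChain, such pairs can be built from `similarity_search_with_score`, which returns `(Document, score)` tuples: `[(doc.page_content, score) for doc, score in store.similarity_search_with_score(question, k=5)]`.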

GitHub Repository

The full source code is available here:

👉 GitHub Repo: local-AI-agent-RAG

You can clone it and run it on your own machine by following the instructions in the README file.

Final Thoughts

This project was mainly built out of curiosity and for fun — a way to experiment with local RAG systems without overcomplicating things.

That said, the same approach can scale much further. With a larger dataset and more powerful hardware, the same design could become significantly faster and more accurate, and could serve as the starting point for a production system.

For now, it serves as a solid proof of concept and a reminder that meaningful AI projects don’t always need massive infrastructure to get started 🚀